This article includes brief implementation details, aims and goals of the 2D Carla environment we shared. You can check it out here.

Simulations are important parts of self driving cars on research. Simulations makes it easy to develop and evaluate driving systems. We see that researchers uses the simulations for training reinforcement learning models to drive, creating driving datasets and transferring the learned knowledge to real world [1].

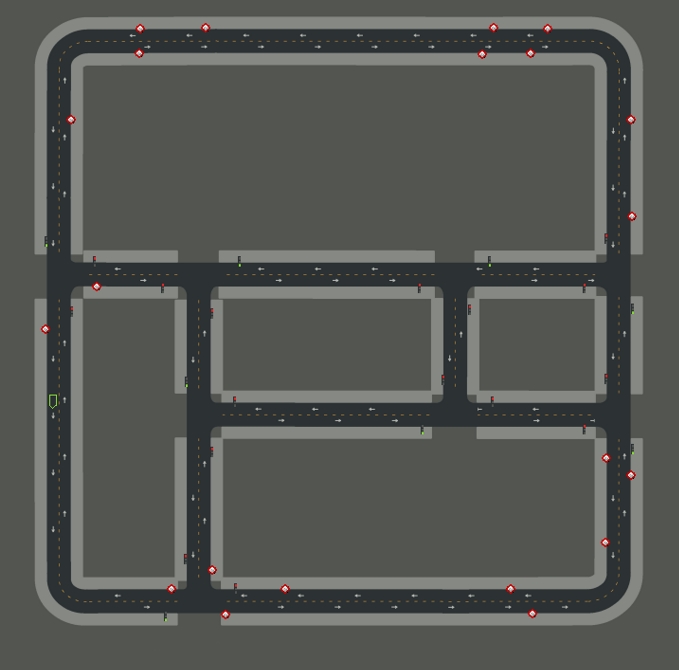

CARLA [2] pincludes different sensors like simple camera, semantic segmented camera in Cityscapes palette, LIDAR etc. It has a nice python API that helps to control cars and environment also helps to create different scenarios. Also it has various maps from simple towns to highways. We chose a simple town for the beginning. The map of the town shown in figure 1.

Fig. 1. The map of the town used as main environment.

It includes junctions, curved roads and separated roads by lines. The map also has spawn points to spawn cars properly on the road.

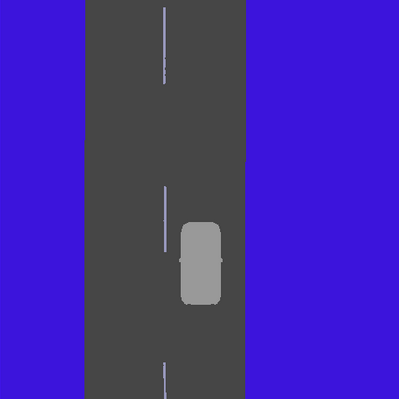

CARLA does not support a 2d sensor. So we put the camera sensor on top of the car to see as bird’s eye view (Fig. 2.). The front part of the car has 3/2 of image and the back part 3/1. The camera always follows the car even the rotations of the car applied to camera. Thus the car always looks same way through.

The camera is an object of environment and it see the poles. The poles corrupt the image we get from camera sensor. In this phase we do not need poles so we remove them from the map to fix that issue. With these justifications we have a clear 2d view from a camera sensor.

The camera sensor isn’t that simple for a model to learn drive end-to-end from scratch even with 2d view. There are lots of objects needs to be detected by model. We use the semantic segmentation sensor on the same position of the camera sensor. In the default sensor there are 12 classes. We reduced it into 4 classes. These are road, lines, out of road and car. The semantic segmented image showed in figure 2.

Fig. 2. Birds eye view of the car with RGB camera (left) and the semantic segmentation (right)

We select a short, small car (Mini Cooper replica). We chose a small car because roads are tide in this map, it takes less space in the road so there are more space to make a maneuver. The car is the agent of our models. It has only 3 actions we set. These are coast, left and right move with half degree. The velocity of the agent is static and its 20 kmh. With these speed and moving it can easily turn corners and junctions. There is no break as action so it keeps moving. The environment don’t have any pedestrian or cars to traffic. We did not look for any traffic rules except keeping in the right lane.

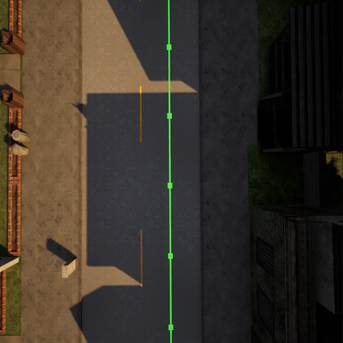

Reward mechanisms are important part of the RL environments. Our main goal is the keep the car in the center of the road. From the CARLA api we can get the closes waypoint in the center of the road. We see how we get the center of the road (green line) in the left image of the figure 3. The ultimate goal is the follow the green line but we are not giving the green line in the observations. We calculate a reward based on the distance between green line and car.

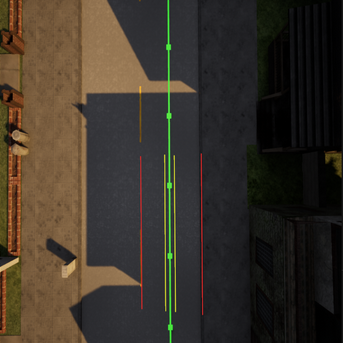

Fig. 3. Birds eye view of the car with RGB camera sensor (left) and the semantic segmentation sensor (right).

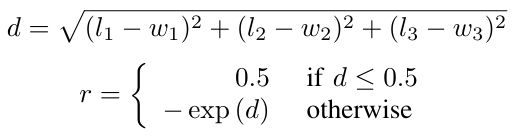

The yellow area on the figure 3 represents the positive reward area. The red lines represent the not allowed area to drive. If the car collide with any point of the red are the episode will stop. If the car location is in this area it gets positive reward else it gets a negative reward based on the distance. Let suppose car location as l, the closest waypoint is w. So we can calculate the reward function with the following equations:

Finally with the reward we built complete reinforcement environment to train models. We have observations from sensors, the agent with actions and the reward from the actions in an observation. The environment has some problems like not identifying the car location and stuck at same points. We overcome from these issues with comparing sequence of observation with each other to detect stuck. Also there is a maximum step count that will stops the episode and resets the environment. With these components we can build, train and evaluate different kind of Reinforcement Learning models on this environment.

References

- Janai, J., Güney, F., Behl, A., & Geiger, A. (2020). Computer Vision for Autonomous Vehicles: Problems, Datasets and State of the Art. Foundations and Trends® in Computer Graphics and Vision, 12(1–3), 1–308. https://doi.org/10.1561/0600000079

- Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., & Koltun, V. (2017). CARLA: An Open Urban Driving Simulator. Proceedings of the 1st Annual Conference on Robot Learning, 1–16.